Protect the Validity of Your Online Survey Data

Fraudulent responses to online surveys are increasing. Bots search the Internet for study recruitment postings, and scammers pretend to be appropriate respondents to get paid for study participation. The result is a corruption of research data, invalidating findings and undermining science.

Our Experience

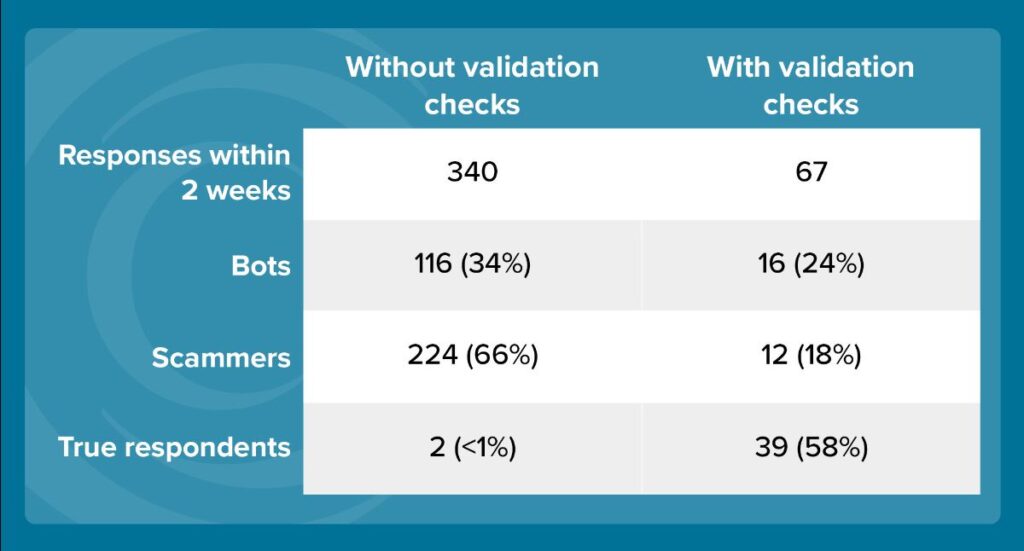

Our own study recruitment efforts have encountered this problem. Recently, we posted a study recruitment flyer across social media. The flyer invited elementary teachers to review and evaluate a website with tools to support social emotional learning (SEL) in schools. We directed individuals interested in participating to complete an online interest survey. Over a 2-week period, 340 interest surveys were submitted. But when we examined the responses, we determined very few were from actual teachers. Most were clearly bots or scammers.

We relaunched recruitment with the same study flyer. This time, we used carefully selected strategies to block bots and scammers, specifically:

- Sending the flyer through our newsletter mailing list and those of partners in a similar field, making sure not to post to social media.

- Using CAPTCHA to decrease bots’ ability to enter the interest survey at all.

- Adding a graphical screener question as a second check after CAPTCHA. We used a JPEG image of a simple equation. Respondents could move forward only if they entered the correct number (5); a blank or incorrect answer ended the survey.

- Setting up validation criteria for email and phone number questions. For example, the survey system made sure phone numbers used 10 numeric digits. If the phone number had an incorrect number of digits, the respondent couldn’t move forward in the survey.

- Requiring a work email address to proceed with the survey.

- Asking open-ended questions to screen respondents. These questions were easy for teachers to answer but difficult for scammers to answer convincingly. For example, we asked respondents to describe an SEL issue they’ve faced with students.

- Requiring a physical mailing address to receive incentives and not offering incentive payments over email.
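
The automated checks in the list above (the screener answer, phone validation, and the work email requirement) could be sketched roughly as follows. This is a minimal Python illustration, not the configuration of the survey system we used: the function names, the short `GENERIC_DOMAINS` list, and the email pattern are all assumptions made for the example.

```python
import re

# Assumption: a short, illustrative list of free email providers.
GENERIC_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com"}


def passes_screener(answer: str, expected: int = 5) -> bool:
    """Return True only for the correct number; blank or wrong answers fail."""
    return answer.strip() == str(expected)


def is_valid_phone(raw: str) -> bool:
    """Accept a phone number only if it contains exactly 10 numeric digits."""
    # Allow only digits and common separators, so "(XXX) XXX-XXXX" is rejected.
    if re.fullmatch(r"[\d\s().\-]+", raw) is None:
        return False
    digits = re.sub(r"\D", "", raw)
    return len(digits) == 10


def is_work_email(address: str) -> bool:
    """Accept a well-formed address whose domain is not a free provider."""
    # Hypothetical, deliberately simple pattern: something@domain.tld
    match = re.fullmatch(r"[^@\s]+@([^@\s]+\.[^@\s]+)", address.strip())
    if match is None:
        return False
    return match.group(1).lower() not in GENERIC_DOMAINS
```

A survey would let a respondent continue only when all three checks return `True`. Rejecting free email domains will also turn away some real teachers, so the list is a trade-off to tune rather than a fixed rule.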

After a 2-week period, 67 interest surveys were submitted. Of the 67 respondents who made it past CAPTCHA, 16 were likely bots: their answers to the simple math question were incorrect. Of the 51 who answered the math question correctly, 12 exited the survey when required to provide a work email and phone number. The remaining 39 respondents completed the full interest survey. We examined their responses to the open-ended questions, searched the web to confirm the information they entered, and conducted phone interviews. In the end, we confirmed that all 39 (100 percent) were true respondents who qualified to participate in the study.

Our Lessons Learned

What did we learn?

- Simply using CAPTCHA went a long way toward decreasing the total number of fraudulent responses. Adding a second graphical question effectively blocked the handful of bots that were able to pass CAPTCHA. As a result, the noise in our potential sample pool vastly decreased.

- Requiring a work email address and using automatic validation checks for emails and phone numbers essentially eliminated scammers. Although some people who exited the survey after these questions may have been real teachers who elected not to proceed, the benefit of these strategies outweighed the costs: the strategies removed all scammers from the sample pool.

- Using these screening questions and validation strategies greatly decreased the amount of time needed to validate potential participants. In our first recruitment round, without built-in validation checks, one of our researchers had to review all 340 responses to weed out fraudulent ones, such as the 95 respondents who entered (XXX) XXX-XXXX for their phone number and the 110 who spent less than 1 minute filling out the survey.

It takes considerable time and attention to detect scammers. Although we received fewer total responses after implementing these built-in validation checks, we actually achieved a significantly higher number of true respondents, and we did it more efficiently. Here’s what matters most: we can feel confident in the validity of the data we collected.

How to Identify Fraudulent Responses

How can you spot fraudulent responses? Look for these clues:

- Generic email addresses (especially Gmail, Yahoo, and Hotmail)

- Email addresses that are clearly different from respondents’ names (e.g., [email protected] for Joe Webster or [email protected] for Caroline Randolph)

- Incorrectly formatted phone numbers, such as (XXX) XXX-XXXX

- Fake school names, such as Bedford or Kirby School

- Real school names with which the respondent isn’t affiliated (checked by searching for staff names on school websites)

- Completion times far shorter than researchers would expect (e.g., spending 30 seconds to complete 12 items)
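
Several of these clues can be checked automatically before a researcher reviews responses by hand. The sketch below is one possible way to do that in Python, under stated assumptions: the `Response` fields, the flag names, the 60-second threshold, and the crude name-matching heuristic are illustrative choices, not part of our actual review process. Checking school names and staff affiliation still requires a manual web search.

```python
from dataclasses import dataclass
from typing import List

# Assumption: a short, illustrative list of free email providers.
GENERIC_DOMAINS = {"gmail.com", "yahoo.com", "hotmail.com"}


@dataclass
class Response:
    """Hypothetical record for one submitted interest survey."""
    name: str
    email: str
    phone: str
    seconds_spent: float


def fraud_flags(r: Response, min_seconds: float = 60.0) -> List[str]:
    """Return the list of warning signs found in one response."""
    flags = []

    # Generic email provider (Gmail, Yahoo, Hotmail).
    domain = r.email.rsplit("@", 1)[-1].lower()
    if domain in GENERIC_DOMAINS:
        flags.append("generic_email")

    # Crude heuristic: no part of the name appears in the email's local part.
    local = r.email.split("@", 1)[0].lower()
    name_parts = [p for p in r.name.lower().split() if len(p) > 1]
    if name_parts and not any(p in local for p in name_parts):
        flags.append("email_name_mismatch")

    # Phone number without exactly 10 digits, e.g. "(XXX) XXX-XXXX".
    if sum(ch.isdigit() for ch in r.phone) != 10:
        flags.append("bad_phone")

    # Survey completed faster than the assumed minimum time.
    if r.seconds_spent < min_seconds:
        flags.append("too_fast")

    return flags
```

A flagged response is not proof of fraud: real teachers may use a personal Gmail address or an email that doesn't contain their name. Treat the flags as a way to prioritize manual review, not to reject respondents outright.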

How to Recruit Online

Our strategies can help you make sure your data is real:

- Use CAPTCHA

- Use a graphical screener question

- Use validation requirements for email addresses and phone numbers

- Require a work email and physical address

- Ask open-ended questions tailored to your audience